Introduction to MCP (Model Context Protocol)

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP as a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.
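Part of what makes MCP a standard "port" is its uniform message format. MCP messages use JSON-RPC 2.0, and the specification defines methods such as `tools/list` and `tools/call`. The following minimal sketch only builds a `tools/call` request. It does not connect to a server, and the tool name `search_docs` and its `query` argument are hypothetical.

```python
import json
from typing import Any


def make_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP-style tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 framing
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # ask the server to invoke one of its tools
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# "search_docs" is a hypothetical tool name used only for illustration.
print(make_tool_call(1, "search_docs", {"query": "refund policy"}))
```

Any MCP server that exposes a tool accepts this same request shape, so the client code does not change from one data source to the next.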

Using MCP in Agentic RAG Systems

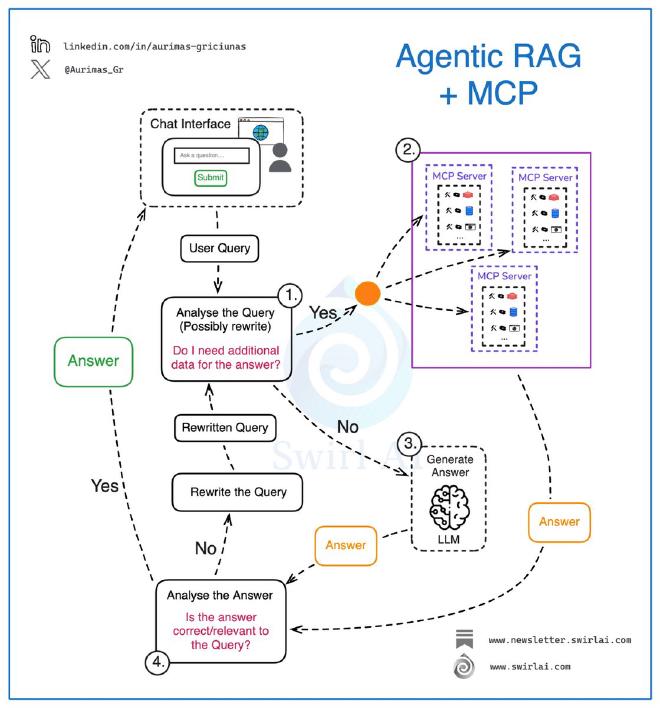

The image above shows how MCP fits into an Agentic RAG system. The steps below describe the workflow it depicts. See the original source link at the end of this article.

Step 1: Determine Whether Additional Data Is Needed

When a user submits a query through the chat interface, the Agent first analyzes the query's intent and decides whether it needs additional data to answer the question. If the user's input is unclear, the Agent may first rewrite it into a more structured query.

If no external data is needed to answer the user’s question, the process skips directly to Step 3 to generate an answer.
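The following sketch shows Step 1 as a function that returns a rewritten query and a retrieve-or-not flag. It is a simplified illustration, not the method from the original diagram. In practice the Agent would usually ask the LLM to rewrite the query and classify its intent. Here a whitespace cleanup stands in for rewriting, and a hypothetical set of cue words (`EXTERNAL_DATA_CUES`) stands in for intent analysis. The names `QueryPlan` and `plan_query` are also illustrative.

```python
import re
from dataclasses import dataclass


@dataclass
class QueryPlan:
    rewritten_query: str
    needs_external_data: bool


# Hypothetical cue words. A real Agent would typically ask the LLM itself
# to classify the query's intent instead of matching keywords.
EXTERNAL_DATA_CUES = {"latest", "current", "today", "price", "order", "status"}


def plan_query(user_input: str) -> QueryPlan:
    """Step 1: tidy the query and decide whether Step 2 (retrieval) is needed."""
    # Stand-in for query rewriting: collapse whitespace, trim trailing punctuation.
    rewritten = " ".join(user_input.split()).strip(" ?!.")
    words = set(re.findall(r"[a-z0-9]+", rewritten.lower()))
    return QueryPlan(rewritten, needs_external_data=bool(words & EXTERNAL_DATA_CUES))


plan = plan_query("  What is the latest   price of plan B?? ")
print(plan.rewritten_query, plan.needs_external_data)
```

If `needs_external_data` is false, the Agent moves straight to answer generation.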

Step 2: Retrieve External Data via MCP

If additional data is needed, the Agent sends query requests through MCP to data sources such as databases, search engines, and file systems. This is where MCP's advantages become apparent:

- ✅ Each data domain can manage its own MCP server and expose specific rules for data usage.

- ✅ Each data domain can enforce data security and compliance at the server level.

- ✅ New data domains can be added to the MCP server pool in a standardized way without rewriting the Agent. As a result, the system's procedural, episodic, and semantic memory can be developed independently.

- ✅ Platform developers can open their data to external users in a standardized way, making data access on the web simple and direct.

- ✅ AI engineers can focus on designing and optimizing the structure of the Agent without worrying about the details of underlying data connections.
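The following sketch illustrates the server-pool idea from the list above. Each data domain owns its lookup logic and its access rule, and the Agent only talks to the pool. It is an in-process stand-in and does not use real MCP transport. Real MCP servers would usually run as separate processes, and the Agent would discover their tools through MCP itself. `DomainServer`, `ServerPool`, the keyword routing, the role names, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DomainServer:
    """In-process stand-in for an MCP server owned by one data domain (hypothetical)."""
    name: str
    keywords: set[str]             # which queries this domain can answer
    allowed_roles: set[str]        # access rule enforced by the domain itself
    lookup: Callable[[str], str]   # the domain's own data-access logic

    def fetch(self, query: str, user_role: str) -> str:
        # Security and compliance are checked on the server side, not in the Agent.
        if user_role not in self.allowed_roles:
            return f"[{self.name}] access denied"
        return f"[{self.name}] {self.lookup(query)}"


class ServerPool:
    """The Agent queries the pool; new domains register without Agent changes."""

    def __init__(self) -> None:
        self._servers: list[DomainServer] = []

    def register(self, server: DomainServer) -> None:
        self._servers.append(server)

    def retrieve(self, query: str, user_role: str) -> list[str]:
        # Ask every server whose keywords overlap the query, in registration order.
        words = set(query.lower().split())
        return [s.fetch(query, user_role) for s in self._servers if s.keywords & words]


pool = ServerPool()
pool.register(DomainServer("orders-db", {"order"}, {"support", "admin"}, lambda q: "order 42: shipped"))
pool.register(DomainServer("hr-files", {"salary"}, {"admin"}, lambda q: "salary bands v3"))
print(pool.retrieve("where is my order", "support"))
print(pool.retrieve("salary order", "support"))
```

Adding a new domain is one more `register` call. The Agent's retrieval code stays the same, and each domain's access rule travels with its server.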

Step 3: Generate Answers

The Agent passes the query, together with any data retrieved in Step 2, to a large language model (LLM), which generates the answer.
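The following sketch shows how Step 3 might combine the query with retrieved data before calling the model. The prompt template is one common pattern, not one specified by the original article. `build_prompt` and `generate_answer` are hypothetical names, and `llm` is a placeholder for whatever model client the Agent uses.

```python
from typing import Callable, Sequence


def build_prompt(query: str, context: Sequence[str]) -> str:
    """Combine the user's query with any data retrieved in Step 2."""
    if not context:
        # Step 1 decided no external data was needed; ask the model directly.
        return f"Question: {query}\nAnswer:"
    sources = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{sources}\n"
        f"Question: {query}\nAnswer:"
    )


def generate_answer(query: str, context: Sequence[str], llm: Callable[[str], str]) -> str:
    # `llm` is a placeholder callable: prompt in, completion text out.
    return llm(build_prompt(query, context))


print(build_prompt("Where is order 42?", ["order 42: shipped"]))
```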

Step 4: Analyze Answers

Before returning the answer to the user, the Agent checks its quality, its correctness, and its relevance to the question. If it finds no issues, it returns the answer to the chat interface on the frontend.

If it finds issues, the Agent rewrites the query into a more precise description and returns to Step 1 to start again.
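The following sketch ties the four steps into one loop. Each step is passed in as a callable, so the control flow is visible without committing to a particular model or MCP client. All parameter names are hypothetical. The `max_rounds` cap is an added assumption not found in the original. It keeps an answer that never passes Step 4 from looping forever, and in that case the function returns the last attempt.

```python
from typing import Callable, Optional


def agentic_rag(
    query: str,
    needs_data: Callable[[str], bool],           # Step 1: is external data needed?
    retrieve: Callable[[str], list[str]],        # Step 2: e.g. via MCP servers
    generate: Callable[[str, list[str]], str],   # Step 3: LLM call
    check: Callable[[str, str], Optional[str]],  # Step 4: None if OK, else a rewritten query
    max_rounds: int = 3,                         # assumed cap on retries
) -> str:
    answer = ""
    for _ in range(max_rounds):
        context = retrieve(query) if needs_data(query) else []
        answer = generate(query, context)
        rewritten = check(query, answer)
        if rewritten is None:
            return answer   # passed analysis: send to the chat interface
        query = rewritten   # refine the query and go back to Step 1
    return answer           # cap reached: return the last attempt


result = agentic_rag(
    "order status",
    needs_data=lambda q: True,
    retrieve=lambda q: ["order 42: shipped"],
    generate=lambda q, ctx: f"{q} -> {ctx[0]}",
    check=lambda q, a: None if "precise" in q else q + " (precise)",
)
print(result)
```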

Summary

As you can see, this process resembles how humans conduct research: ask questions → collect materials → reach conclusions → analyze conclusions → ask new questions → repeat the above steps.